Networking delays - What to do about them

In our previous article we discussed the different aspects that contribute to the overall time delay in network communications. In this article we focus on how to reduce these delays and how to cope with them, looking in particular at solutions for streaming applications.

Why are time delays a problem for video streaming?

As you can imagine, too much delay in a person-to-person stream makes communication difficult. Furthermore, some applications need to give feedback to the user who is streaming the video. If the time delay is too large, the user cannot make use of this feedback. So let's look at some numbers.

For a one-to-one stream, an acceptable delay is around 150 ms one way, which gives a round-trip delay of 300 ms. So for a one-to-one call between two people, the total round-trip delay should stay below 300 ms.

Acceptable delays are lower when we want to give feedback to the user, for example when users control something they see in the video and need feedback on their input. The delay between an input and its visible effect is called input lag. User performance and satisfaction start to decrease at around 50 ms round-trip delay and decrease drastically above 100 ms (see: Input lag: How important is it?).

So for streaming applications that give feedback to the user, we preferably want the one-way delay to stay under 50 ms, which keeps the round trip below the 100 ms at which performance drops drastically.
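The thresholds above can be turned into a quick check for a measured round-trip time. This is a minimal sketch that only encodes the figures quoted in this article (300 ms round trip for a call, 50 ms and 100 ms round trip for input lag); the function name and the labels it returns are hypothetical.

```python
def rate_round_trip(rtt_ms: float) -> dict:
    """Compare a measured round-trip time with the thresholds quoted in the text."""
    return {
        # one-to-one call: about 150 ms one way, i.e. 300 ms round trip
        "call_ok": rtt_ms < 300,
        # input lag: user performance starts to drop around 50 ms round trip...
        "feedback_good": rtt_ms < 50,
        # ...and drops drastically above 100 ms round trip
        "feedback_poor": rtt_ms > 100,
    }

print(rate_round_trip(80))
# {'call_ok': True, 'feedback_good': False, 'feedback_poor': False}
```

An 80 ms round trip is fine for a call but already noticeable as input lag.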

Another issue in video streaming is that too much packet loss corrupts the stream, making the video data unusable. The decoder cannot correctly decode the incoming video data, which results in an unreadable stream or a distorted image (like the image above).

We will now look at how to reduce these delays in streaming applications with user feedback, using some DIY examples that you can run on your own PCs with GStreamer.

Setting up the GStreamer DIY environment

To run the different streaming test scenarios in this article, you will need two PCs connected to the same network. One PC will be our source (it needs a webcam). The other PC will be our sink and will play back the video. If you don't have two devices available, you can also run most tests with the same PC acting as both source and sink; the latency effects will just be a little less noticeable.

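On both PCs, run the following command to install GStreamer (it uses the Homebrew package manager; on other systems, install GStreamer and the same plugin sets with your usual package manager):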

brew install gstreamer gst-plugins-good gst-plugins-bad gst-plugins-ugly gst-libav

Once GStreamer is installed, you can test it by sending the video from the webcam on the source PC to the sink PC. Open a local network port of your choosing on the firewall of the sink PC and note the sink PC's IP address (see the sources below for more information). Then run the following commands. The sink commands in this article listen on port 5555; replace it with your chosen port if it differs, so that both sides use the same port.

Source PC:

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480' ! jpegenc ! rtpjpegpay ! udpsink host=<sink-pc-ip> port=<chosen port>

Sink PC:

gst-launch-1.0 udpsrc port=5555 ! 'application/x-rtp, media=(string)video, payload=(int)26, clock-rate=(int)90000' ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink

After a moment, a window should appear on the sink PC showing the video from the source PC. You may notice some delay in the video stream, but this depends mostly on the encoding and decoding capabilities of your hardware. We will discuss this in more detail further on.

Now that our first stream is up and running, we will look at how each aspect of time delay discussed in the previous article affects it.

Processing

First of all, note that video processing (such as encoding and decoding) also introduces delay, independently of the network. Sending raw video over the internet is usually impractical because it requires too much bandwidth, so the video is compressed before sending and decompressed on arrival. The delay this adds depends on the codecs used and on the encoding/decoding processing power of the device that performs the work.
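A quick calculation shows why raw video is rarely sent over the internet. This sketch computes the bitrate of uncompressed 640x480 video at 30 frames per second, the resolution and frame rate used in our pipelines; the two pixel formats (24-bit RGB and 12-bit 4:2:0 YUV) are assumptions chosen for illustration.

```python
def raw_video_bitrate(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Bits per second needed to send every frame uncompressed."""
    return width * height * bits_per_pixel * fps

# 24 bits per pixel corresponds to RGB; 12 bits per pixel to 4:2:0 YUV (e.g. I420).
for name, bpp in [("RGB, 24 bpp", 24), ("I420, 12 bpp", 12)]:
    mbps = raw_video_bitrate(640, 480, 30, bpp) / 1e6
    print(f"{name}: {mbps:.1f} Mbit/s")
# RGB, 24 bpp: 221.2 Mbit/s
# I420, 12 bpp: 110.6 Mbit/s
```

This is why the pipelines in this article compress each frame with jpegenc before sending it.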

We will not consider this delay in this article and will therefore keep the same video processing pipeline throughout our tests.

Communication link / Routing

The first aspect of network delay in video streaming that we will look at is the delay caused by the communication link and the routing of the data. In our previous article we saw that the transmission link and the number of nodes in the network influence the time delay.

So to reduce the delay, we want to optimise the link between the source and the sink. Ideally, we want a link with a high transmission speed (wired rather than wireless) and as few nodes as possible between them (as direct as possible).

So let's run some tests and see how this affects the delay in our stream:

Local network wireless connection over UDP:

To test the delay on a network connection with very few hops, use the source and sink commands from the setup. The delay you observe in the video (for example when you clap your hands in front of the camera) should be minimal.

Source PC:

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480' ! jpegenc ! rtpjpegpay ! udpsink host=<sink-pc-ip> port=<chosen port>

Sink PC:

gst-launch-1.0 udpsrc port=5555 ! 'application/x-rtp, media=(string)video, payload=(int)26, clock-rate=(int)90000' ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink

4G Internet connection over UDP:

Now we are going to increase the number of hops between our source and our sink. To do this, connect the source PC to a 4G mobile hotspot on your phone. On the sink side, enable port forwarding on your home router: forward the chosen port (5555 in my example) to the IP address of your sink PC for the UDP tests, and to the source PC for the TCP tests (see the sources below for more information). Also make sure this port is open on the firewall of your sink PC. Then look up your public internet IP address (for example on whatsmyip.org).

On the source PC, change the destination IP address to this public internet IP address.

Source PC:

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480' ! jpegenc ! rtpjpegpay ! udpsink host=<internet-ip> port=<chosen port>

Sink PC:

gst-launch-1.0 udpsrc port=5555 ! 'application/x-rtp, media=(string)video, payload=(int)26, clock-rate=(int)90000' ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink

If everything is configured correctly, you should see the video stream on the sink PC, and you should notice that the delay has increased.

To conclude, for an optimal low-latency communication link we prefer a wired connection over a WiFi or 4G connection. However, with the introduction of 5G networks, the delays caused by the wireless transmission link are greatly reduced (and no, corona infections do not increase as a side effect).

To further reduce network delays for streaming, make the link between the source and the sink as direct as possible; in other words, use a route that requires the fewest hops.

Packet loss

Now let's tackle the delays caused by packet loss! In our previous article we saw that packet loss is caused by the quality of the transmission link and by its capacity.

To reduce these delays, preferably use a wired transmission link: wired links have far fewer errors and thus far less packet loss. Second, keep the bandwidth you use below the capacity of the link to prevent congestion and, consequently, dropped packets.

Furthermore, if circumstances prevent the use of a high-quality, high-bandwidth transmission link, you can consider using TCP instead of UDP. TCP checks whether packets have arrived at their destination and resends them if not, so no data is lost. However, as you can imagine, if the network loses a lot of packets, TCP will drastically increase the time delay, because it constantly has to resend packets. So you need to carefully weigh the quality and bandwidth of the transmission link, the bandwidth used by the stream and the protocol you choose.
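To get a feel for how retransmissions add up, here is a deliberately simplified model (an assumption, not how a real TCP stack behaves in detail): every transmission attempt is lost independently with probability p, and each loss costs one retransmission timeout before the next attempt. The expected number of failed attempts is then p / (1 - p). The one-way delay and timeout values are made up.

```python
def expected_tcp_delay_ms(one_way_ms: float, loss_prob: float, rto_ms: float) -> float:
    """Expected delivery delay of one packet when every loss costs one timeout.

    Simplified model (assumption): attempts are lost independently with
    probability loss_prob, and the sender waits rto_ms before resending.
    """
    if not 0 <= loss_prob < 1:
        raise ValueError("loss_prob must be in [0, 1)")
    expected_retries = loss_prob / (1 - loss_prob)  # mean of a geometric distribution
    return one_way_ms + expected_retries * rto_ms

for p in (0.0, 0.01, 0.1, 0.3):
    print(f"loss {p:.0%}: {expected_tcp_delay_ms(40, p, 200):.1f} ms")
```

With a 40 ms one-way delay and a 200 ms timeout, 30% loss raises the average to roughly 126 ms. Real TCP adapts its timeouts and can retransmit sooner, but a lost segment also holds back all data behind it, so treat this only as an illustration of the trend.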

Let's see how these aspects influence the overall delay of our stream, so that you can test the different configurations yourself and make an informed decision.

4G connection with low bandwidth congestion over UDP

Source PC:

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480,framerate=30/1' ! jpegenc ! rtpjpegpay ! udpsink host=<internet-ip> port=<chosen port>

Sink PC:

gst-launch-1.0 udpsrc port=5555 ! 'application/x-rtp, media=(string)video, payload=(int)26, clock-rate=(int)90000, framerate=(fraction)30/1' ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink

4G connection with low bandwidth congestion over TCP (the chosen port must now be forwarded to the source PC, which acts as the TCP server)

Source PC:

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480,framerate=30/1' ! jpegenc ! tcpserversink host=0.0.0.0 port=<chosen port>

Sink PC:

gst-launch-1.0 tcpclientsrc host=<internet-ip> port=<chosen port> ! 'image/jpeg,width=640,height=480,framerate=30/1' ! jpegdec ! videoconvert ! autovideosink

Now repeat the UDP and TCP tests, but this time simulate a congested link to see the consequences. On both PCs, run the following commands alongside the GStreamer commands. Open (and forward) another port for an iPerf bandwidth test that will congest the link.

On the PC with the new port forwarded:

iperf -s -u -p <new-chosen-port> -i 1

On the other PC:

iperf -c <internet-ip> -p <new-chosen-port> -u -b 300M

You will probably need to experiment with the -b option of the iPerf client: it sets the bandwidth iPerf will use, so choose a value close to the maximum bandwidth of your link.

What we see is that on an uncongested link, the TCP stream is slightly more delayed, but this is barely noticeable. It gets interesting when we start congesting the link. With TCP, the stream gets more delayed the more the link is congested, up to the point where the video freezes while TCP tries to resend all the lost packets. The UDP stream copes better with a heavily congested link: since UDP ignores packet loss and simply keeps sending data, the video is only a bit more delayed and freezes briefly when too many packets are lost for the decoder to decode the stream correctly.

To conclude, here are some scenarios to consider:

- If bandwidth capacity is sufficient and the transmission link is of high quality, packet loss will be minimal, so UDP is your best choice for a low-latency stream.

- If bandwidth capacity is sufficient but the transmission link is of low quality, packet loss will be considerable. TCP is your choice here to prevent corrupted data.

- If bandwidth capacity is insufficient but the transmission link is of high quality, packet loss will be considerable, but using TCP will cause high delays. Use a UDP connection and reduce both the bandwidth used by other traffic on the link and the bandwidth used by the stream.

- If bandwidth capacity is insufficient and the transmission link is of low quality, packet loss will be high. Reduce the bandwidth used by the stream and by other services on the link, and use a TCP connection to recover lost packets and thus prevent a corrupted stream.
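The four scenarios can be summarised as a small lookup. The function name and its two yes/no inputs are a hypothetical simplification: in practice, "sufficient" and "high quality" are judgement calls based on measurements such as the iPerf tests above.

```python
def recommend_transport(bandwidth_sufficient: bool, link_quality_high: bool) -> str:
    """Map the four scenarios above to a transport recommendation."""
    if bandwidth_sufficient and link_quality_high:
        return "UDP: packet loss is minimal, so keep latency low"
    if bandwidth_sufficient:  # enough capacity, but a low-quality link
        return "TCP: resend the packets lost to transmission errors"
    if link_quality_high:  # good link, but not enough capacity
        return "UDP: and reduce the bandwidth used by the stream and other traffic"
    # not enough capacity and a low-quality link
    return "TCP: and reduce the bandwidth used by the stream and other traffic"

print(recommend_transport(bandwidth_sufficient=False, link_quality_high=True))
```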

Jitter

The last aspect we are going to tackle in our stream communications is jitter. As we saw previously, jitter is caused by variable delays in our network. As jitter increases, so does the chance of ending up with a corrupted stream.

There are three ways to deal with jitter. The first is to reduce the overall traffic on the transmission link: besides reducing packet loss, as mentioned before, this also reduces the variation in delay.

Another solution is to add a buffer on the sink side of the stream that collects the arriving packets and releases them in order at a steady pace, removing the jitter. The last solution is to use a TCP stream: besides handling packet loss, TCP delivers the data in the order it was sent. However, as you can imagine, storing stream data before using it increases the time delay of our stream. So you have to weigh the jitter that remains on the transmission link after optimising the traffic against the length of the buffer used.
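The buffering idea can be sketched as a fixed-delay jitter buffer: every packet is played a fixed time after it was sent, in sequence order, and a packet that arrives after that moment is treated as lost. This is a simplified illustration, not a description of how rtpjitterbuffer works internally. It assumes sender and receiver share a clock, and the packet timings are made up.

```python
from typing import NamedTuple

class Packet(NamedTuple):
    seq: int         # sequence number set by the sender
    send_ms: int     # sender timestamp
    arrival_ms: int  # arrival time at the sink (same clock as send_ms, an assumption)

def jitter_buffer(packets, playout_delay_ms):
    """Fixed-delay jitter buffer: play each packet at send_ms + playout_delay_ms.

    Returns (schedule, late): schedule lists (playout_time, seq) in sequence
    order; late lists the sequence numbers that missed their playout time.
    """
    on_time, late = [], []
    for p in packets:
        if p.arrival_ms <= p.send_ms + playout_delay_ms:
            on_time.append(p)
        else:
            late.append(p.seq)  # arrived too late to be played: effectively lost
    schedule = [(p.send_ms + playout_delay_ms, p.seq)
                for p in sorted(on_time, key=lambda p: p.seq)]
    return schedule, sorted(late)

# Packets sent every 10 ms; the network adds 20-70 ms of variable delay,
# so they arrive out of order (0, 2, 1, 4, 3).
packets = [Packet(0, 0, 20), Packet(1, 10, 55), Packet(2, 20, 45),
           Packet(3, 30, 100), Packet(4, 40, 70)]
for delay in (50, 80):
    print(delay, jitter_buffer(packets, delay))
```

With a 50 ms buffer, packet 3 arrives too late and is dropped; with 80 ms, every packet is played evenly spaced and in order, but 30 ms later. That is the trade-off between buffer length and delay described above.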

Lets try some practical configurations to see the impacts this has on our stream.

We are going to add a buffer to our UDP stream. To compare the difference, first launch a bandwidth-congested UDP stream over the internet as in the previous section.

Then add a buffer (rtpjitterbuffer) to the GStreamer command on the sink PC. The video will freeze less, but it will have more delay.

Source PC (unchanged):

gst-launch-1.0 autovideosrc ! 'video/x-raw,width=640,height=480,framerate=30/1' ! jpegenc ! rtpjpegpay ! udpsink host=<internet-ip> port=<chosen port>

Sink PC stream with buffer:

gst-launch-1.0 udpsrc port=5555 ! 'application/x-rtp, media=(string)video, payload=(int)26, clock-rate=(int)90000, framerate=(fraction)30/1' ! rtpjitterbuffer ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink

To conclude, the first step is to measure the jitter on the transmission link and determine whether it is too high for a stable stream. Then remove most of the jitter (you do not need to cover every delay variation) by adding a buffer on the sink side that is as small as possible. The length of the rtpjitterbuffer buffer is set with its latency property, in milliseconds (200 ms by default), for example rtpjitterbuffer latency=50.
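One way to pick an "as small as possible" buffer is to measure the transit time of a series of packets (arrival time minus sender timestamp) and size the buffer to cover most, but not all, of the variation. This is a heuristic sketch: the coverage targets and the sample values are assumptions, and a constant clock offset between the two PCs cancels out because only differences from the fastest packet are used.

```python
import math

def buffer_latency_ms(transit_ms, coverage=0.95):
    """Buffer length (ms) that absorbs `coverage` of the observed delay variation.

    transit_ms: arrival time minus sender timestamp for each packet. A constant
    clock offset between sender and receiver cancels out, because only the
    extra delay relative to the fastest packet is used.
    """
    if not transit_ms:
        raise ValueError("need at least one transit-time sample")
    fastest = min(transit_ms)
    extra = sorted(t - fastest for t in transit_ms)
    rank = max(1, math.ceil(coverage * len(extra)))  # nearest-rank percentile
    return extra[rank - 1]

samples = [20, 22, 21, 35, 23, 20, 24, 60, 22, 21]  # made-up measurements in ms
print(buffer_latency_ms(samples, 0.9))  # 15
print(buffer_latency_ms(samples, 1.0))  # 40
```

The result could serve as a starting value for the latency property of rtpjitterbuffer. Here, covering 90% of packets needs 15 ms of buffering, while covering every packet would need 40 ms because of a single outlier.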

It can be challenging to find the right set of configurations to minimise the network delays in our stream. Optimising the transmission link is always a good idea before diving into the other methods and configuration options for solving network delay issues.

Now it is up to you to set up the perfect smooth, fast and reliable stream!

Thank you for reading!

Sources

IP address lookup: https://www.howtogeek.com/117371/how-to-find-your-computers-private-public-ip-addresses/ (29–01–2021)

Port forwarding: https://www.noip.com/support/knowledgebase/general-port-forwarding-guide/ (18–02–2021)

iPerf: https://iperf.fr/

Input lag: How important is it? https://www.cnet.com/news/input-lag-how-important-is-it/

GStreamer: https://gstreamer.freedesktop.org/ (18–02–2021)

Networking delays: A hands-on experience

Have you ever been baffled by the time it takes for your data to travel? Aren't signals, whether they travel over a wire or through the air, supposed to move at the speed of light?

In this article we will look under the hood of network links to find out what delays our data, and you will be able to measure some delays in your own network.

Aspects

Network delays have a variety of causes. The most obvious one is simply the distance between the source and the destination. But our data does not travel directly from a source to a destination: it passes through many intermediate stations, each of which adds to the delay. Some data may be lost on the way, or arrive in a scrambled order.

We will explain the different aspects of a network that contribute to delays, and you can measure some real-life numbers yourself.

Throughout this article I will mention two network communication protocols, UDP and TCP. Without going into the details of these protocols, it is good to know their main difference:

UDP: User Datagram Protocol is a protocol that sends data packets with a minimalist header and without any checks. Data packets are sent and that’s it.

TCP: Transmission Control Protocol is a protocol that sends packets and checks whether they have arrived. If not, it resends them. This control requires more overhead (more information in the header).

Latency

Latency in network communication refers to the time it takes for a packet to be sent from one end of a communication link to the other. It is made up of four components, each contributing to the overall delay.

Propagation delay is the time it takes for the signal to travel from the sender to the receiver. This time t [s] depends on the distance d [m] between the sender and the receiver and the propagation speed v [m/s] of the signal on the transmission link: t = d/v

Transmission delay is the time it takes to push a data packet, once it is processed and ready to be sent, onto the outgoing link. This time t [s] depends on the size of the packet S [bytes] and the transmission capacity (bandwidth) C [bytes/s] of the outgoing link: t = S/C

Queuing delay is the time a data packet has to wait in the buffer of a transmission node before it is processed and sent. This time depends on the amount of incoming traffic, the capacity of the transmission link and the transmission protocol used (UDP/TCP).

Processing delay is the time it takes for a transmission node to process the data packet, for example to read its header and decide where to forward it. This time depends on the processing speed of the node.

Adding all these delays together gives the total network delay, also called network latency. However, real networks do a lot more behind the scenes, which adds even more delay.
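The four components can be added up for a single link with the formulas above. In this sketch the numbers are assumptions chosen for illustration: a 6,000 km fiber link (roughly France to the US east coast) with a signal speed of about 2×10^8 m/s (roughly two thirds of the speed of light, typical for optical fiber), a 1,500-byte packet on a 100 Mbit/s link (12.5×10^6 bytes/s), 30,000 bytes already queued ahead of it, and 50 µs of processing. Modelling queuing as "bytes ahead divided by capacity" assumes a simple first-in, first-out buffer.

```python
def link_latency_s(distance_m, speed_m_s, packet_bytes, capacity_bytes_s,
                   backlog_bytes=0, processing_s=0.0):
    """Sum of the four delay components for one link, in seconds."""
    propagation = distance_m / speed_m_s            # t = d / v
    transmission = packet_bytes / capacity_bytes_s  # t = S / C
    # FIFO assumption: the bytes already queued must be sent first
    queuing = backlog_bytes / capacity_bytes_s
    return propagation + transmission + queuing + processing_s

total = link_latency_s(6_000_000, 2e8, 1500, 12.5e6, backlog_bytes=30_000, processing_s=50e-6)
print(f"{total * 1000:.2f} ms")  # 32.57 ms
```

Over this distance, propagation (30 ms) dominates; transmission of a single packet takes only 0.12 ms, while the queue adds 2.4 ms.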

Routing

Now that we know which parts of a network link cause delays, we can start to understand where network delays come from and look at some numbers.

If a network consisted of a single link, our delays would be minimal and barely measurable. In current network infrastructures, however, a data packet does not travel over one link but hops across many different transmission nodes, each with its own propagation, transmission, queuing and processing delays. So if a single node adds a couple of milliseconds, you can imagine that a transmission from your laptop at home in France to a server in the United States and back, hopping over many nodes, causes significant delays. So let's measure this.
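Because every node adds its own delay, the one-way delay of a path is the sum of the per-hop delays. This sketch assumes the reply travels back over the same hops, so the round trip is twice the one-way sum; the hop list is hypothetical, not a measured route.

```python
def path_round_trip_ms(hop_delays_ms):
    """Round-trip time over a path, assuming the reply takes the same hops back."""
    # each hop contributes its own propagation, transmission, queuing and processing delay
    one_way = sum(hop_delays_ms)
    return 2 * one_way

# Hypothetical path: WiFi to the home router, 12 short hops inside Europe,
# one transatlantic hop, then 3 hops to the server.
hops = [3.0] + [0.5] * 12 + [30.0] + [0.5] * 3
print(f"{path_round_trip_ms(hops):.1f} ms over {len(hops)} hops")  # 81.0 ms over 17 hops
```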

To get insight into the delays in your home network, you can simply use the ping command in your terminal. The commands in this article work in terminals on Linux or Mac machines. On Windows you can use PowerShell, but the commands may differ a bit.

Try it for yourself.

First, we will measure the round-trip delay from your terminal to your machine's own loopback network interface, whose address is localhost or 127.0.0.1:

    ping -c 5 localhost
    PING localhost (127.0.0.1) 56(84) bytes of data.
    64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.067 ms
    64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.058 ms
    64 bytes from localhost (127.0.0.1): icmp_seq=3 ttl=64 time=0.020 ms
    64 bytes from localhost (127.0.0.1): icmp_seq=4 ttl=64 time=0.057 ms
    64 bytes from localhost (127.0.0.1): icmp_seq=5 ttl=64 time=0.058 ms
    --- localhost ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4073ms
    rtt min/avg/max/mdev = 0.020/0.052/0.067/0.016 ms

You can see that the round-trip delays are very small. Now we can go a little further and look at the delays between our machine and our local network router. As the distance and the number of transmission nodes increase, the time delay increases as well. Find your router's IP address; it is often 192.168.0.1 or 192.168.1.1 (you can check here to find your IP addresses: https://www.howtogeek.com/117371/how-to-find-your-computers-private-public-ip-addresses/):

    ping -c 5 192.168.0.1
    PING 192.168.0.1 (192.168.0.1) 56(84) bytes of data.
    64 bytes from 192.168.0.1: icmp_seq=1 ttl=64 time=3.58 ms
    64 bytes from 192.168.0.1: icmp_seq=2 ttl=64 time=3.74 ms
    64 bytes from 192.168.0.1: icmp_seq=3 ttl=64 time=2.16 ms
    64 bytes from 192.168.0.1: icmp_seq=4 ttl=64 time=1.91 ms
    64 bytes from 192.168.0.1: icmp_seq=5 ttl=64 time=2.48 ms
    --- 192.168.1.254 ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4006ms
    rtt min/avg/max/mdev = 1.912/2.773/3.736/0.746 ms

To see even longer delays try to ping an address that is much further away and requires more hops. For example google.com, or try addresses that you know are very far away:

    ping -c 5 google.com
    PING google.com(par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e)) 56 data bytes
    64 bytes from par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e): icmp_seq=1 ttl=120 time=3.09 ms
    64 bytes from par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e): icmp_seq=2 ttl=120 time=5.48 ms
    64 bytes from par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e): icmp_seq=3 ttl=120 time=3.17 ms
    64 bytes from par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e): icmp_seq=4 ttl=120 time=4.96 ms
    64 bytes from par21s03-in-x0e.1e100.net (2a00:1450:4007:810::200e): icmp_seq=5 ttl=120 time=5.77 ms
    --- google.com ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4006ms
    rtt min/avg/max/mdev = 3.091/4.492/5.769/1.142 ms
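If you want to post-process many ping runs, the summary line can be recomputed from the individual time= values. This is a sketch that assumes the Linux/macOS output format shown above; the mdev column is computed here as the population standard deviation, which, up to rounding, matches the numbers in the outputs above.

```python
import re
import statistics

def ping_stats(output: str) -> dict:
    """Extract the per-packet round-trip times from ping output and summarise them."""
    times = [float(t) for t in re.findall(r"time=([\d.]+) ms", output)]
    if not times:
        raise ValueError("no 'time=... ms' entries found")
    return {
        "min": min(times),
        "avg": statistics.fmean(times),
        "max": max(times),
        "mdev": statistics.pstdev(times),  # population standard deviation
    }

SAMPLE = """\
64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.067 ms
64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.058 ms
64 bytes from localhost (127.0.0.1): icmp_seq=3 ttl=64 time=0.020 ms
64 bytes from localhost (127.0.0.1): icmp_seq=4 ttl=64 time=0.057 ms
64 bytes from localhost (127.0.0.1): icmp_seq=5 ttl=64 time=0.058 ms
"""
print(ping_stats(SAMPLE))
```

For the localhost run this gives an average of 0.052 ms and an mdev of about 0.016 ms, the same as the summary line printed by ping.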

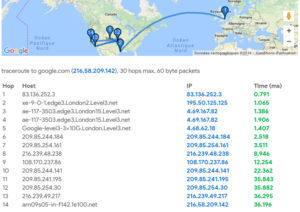

In addition to measuring the delay between our machine and a destination on the internet, we can also see every step our data packet takes across the network. Try the following from your local machine: first check the route to your local router, then the route to a website on the internet. You will see that only one hop is needed to reach your local router. That is why the delay is lower; here the delay is mostly due to the wireless connection if you are on WiFi. Reaching a website on the internet requires many more hops.

    traceroute 192.168.0.1
    traceroute to 192.168.0.1 (192.168.0.1), 30 hops max, 60 byte packets
    1 _gateway (192.168.0.1) 4.309 ms 4.277 ms 4.265 ms

    traceroute google.com
    traceroute to google.com (216.58.201.238), 30 hops max, 60 byte packets
    1 _gateway (192.168.0.1) 2.342 ms 2.251 ms 2.203 ms
    2 78.192.XX.XXX (78.192.XX.XXX) 7.211 ms 7.165 ms 7.121 ms
    3 78.255.XX.XXX (78.255.XX.XXX) 4.567 ms 4.523 ms 5.998 ms
    4 mil75-49m-1-v902.intf.nro.proxad.net (78.254.253.33) 4.736 ms 4.674 ms 5.002 ms
    5 rhi75-49m-1-v904.intf.nro.proxad.net (78.254.252.134) 4.558 ms 4.896 ms 4.852 ms
    6 fes75-ncs540-1-be3.intf.nro.proxad.net (78.254.242.206) 4.826 ms 2.897 ms 2.813 ms
    7 p13-9k-3-be2003.intf.nro.proxad.net (78.254.242.45) 2.724 ms 4.220 ms 4.140 ms
    8 194.149.165.209 (194.149.165.209) 2.895 ms 2.850 ms 2.805 ms
    9 * * *
    10 * * *
    11 be2151.agr21.par04.atlas.cogentco.com (154.54.61.34) 2.542 ms 3.688 ms 3.030 ms
    12 tata.par04.atlas.cogentco.com (130.117.15.70) 11.159 ms 11.111 ms 10.887 ms
    13 72.14.212.77 (72.14.212.77) 3.838 ms 3.789 ms 3.744 ms
    14 108.170.244.161 (108.170.244.161) 3.764 ms 3.696 ms 108.170.244.225 (108.170.244.225) 4.880 ms
    15 216.239.48.27 (216.239.48.27) 3.543 ms 3.277 ms 3.198 ms
    16 par10s33-in-f14.1e100.net (216.58.201.238) 3.074 ms 3.032 ms 2.984 ms
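To compare routes, it can help to count the hops in traceroute output. This small parser is a sketch that assumes the Linux format shown above: one line per hop starting with the hop number, and `* * *` for a hop that did not answer in time.

```python
import re

def count_hops(traceroute_output: str):
    """Return (number of hops, hops that did not answer) from traceroute output."""
    hops, silent = 0, []
    for line in traceroute_output.splitlines():
        m = re.match(r"\s*(\d+)\s+(.*)", line)
        if not m:
            continue  # header such as "traceroute to ...", or a blank line
        hops = int(m.group(1))
        if m.group(2).strip() == "* * *":
            silent.append(hops)
    return hops, silent

example = """traceroute to google.com (216.58.201.238), 30 hops max, 60 byte packets
 1  _gateway (192.168.0.1)  2.342 ms  2.251 ms  2.203 ms
 9  * * *
10  * * *
16  par10s33-in-f14.1e100.net (216.58.201.238)  3.074 ms  3.032 ms  2.984 ms"""
print(count_hops(example))  # (16, [9, 10])
```

Applied to the two runs above, this reports 1 hop to the router and 16 hops to google.com, two of which did not answer.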

To dig further into network delays, we will look at two more aspects that contribute to the total delay: packet loss and jitter. Data communication implementations usually have to compensate for them, but compensating increases the queuing and processing delays in our transmission nodes even more.

Packet Loss

Sometimes data gets lost while travelling across a network. This is called packet loss: a packet that is sent never arrives at its destination. Packet loss has two causes.

The first cause of packet loss is errors in the transmission of data. These occur more often on wireless links than on wired links, but they can also occur in transmission switches due to processing errors.

The second cause of packet loss is bandwidth congestion. This happens when more data passes through a transmission node than the node has capacity for. The node's buffer fills up, and once a certain threshold is reached, the node starts dropping data packets.
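A simplified drop-tail queue shows both effects: while traffic exceeds capacity, the buffer grows (and with it the queuing delay), and once it is full, the excess is dropped. In this sketch, packets are counted per time step ("tick"), and the arrival rate, link capacity and buffer size are made-up numbers.

```python
def simulate_drop_tail(arrivals_per_tick, service_per_tick, buffer_size):
    """Simulate a node with a finite FIFO buffer that drops packets when full.

    Each tick, new packets join the buffer if there is room (the rest are
    dropped), then the node forwards up to service_per_tick packets.
    Returns the total number of dropped packets and the queue length after
    every tick.
    """
    queue, dropped, history = 0, 0, []
    for arriving in arrivals_per_tick:
        accepted = min(arriving, buffer_size - queue)  # room left in the buffer
        dropped += arriving - accepted                  # no room: packets are lost
        queue += accepted
        queue -= min(queue, service_per_tick)           # forward what the link can carry
        history.append(queue)
    return dropped, history

# 10 packets arrive per tick, but the outgoing link only carries 8:
print(simulate_drop_tail([10] * 8, service_per_tick=8, buffer_size=20))
# (4, [2, 4, 6, 8, 10, 12, 12, 12])
# Below capacity, the buffer never fills and nothing is dropped:
print(simulate_drop_tail([6] * 8, service_per_tick=8, buffer_size=20))
# (0, [0, 0, 0, 0, 0, 0, 0, 0])
```

The growing queue is the queuing delay building up; once the buffer is full, the excess is dropped every tick.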

As packet loss increases, so does the risk of data corruption. This is especially harmful when the data being sent is large and has to be split into many packets, as in video streaming. When too many packets are lost, the receiving process cannot get all the data it needs to decode the video stream, so the video data is corrupted and lost.
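Splitting a frame into many packets multiplies the risk: if losses are independent (a simplifying assumption), a frame of n packets arrives intact with probability (1 - p)^n. The 30 KB frame size and the 1,400-byte payload per packet are hypothetical values for illustration.

```python
import math

def packets_per_frame(frame_bytes: int, payload_bytes: int = 1400) -> int:
    """Number of packets needed to carry one encoded frame."""
    return math.ceil(frame_bytes / payload_bytes)

def frame_intact_probability(loss_prob: float, n_packets: int) -> float:
    """Probability that all packets of a frame arrive, if losses are independent."""
    return (1 - loss_prob) ** n_packets

n = packets_per_frame(30_000)  # a hypothetical 30 KB JPEG frame -> 22 packets
for loss in (0.001, 0.004, 0.02):
    print(f"loss {loss:.1%}: {frame_intact_probability(loss, n):.1%} of frames intact")
```

With the 0.4% packet loss measured with ping later in this section, about 8% of such frames would be damaged, even though only 1 packet in 250 is lost.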

Let's see packet loss in action. We can use the ping command for this, decreasing the interval between ping packets and the time we wait for each response. You will need superuser rights for this command.

Be aware that to see packet loss, you need a route that cannot cope with your requests. Google will cope easily (its servers are designed for very high traffic), so try another website.

I tried github.com. Be aware that if you ping the same website a large number of times, its operators will not be happy and your IP address could get banned. If you do not see any packet loss, your connection is stable and there is not enough traffic on your network to cause congestion. You can try starting a high-quality video stream on YouTube and rerunning the ping command. If you are on WiFi, you can also move your PC far away from the router.

PS: On macOS, replace the commas with dots in the -i and -W values of the command below. Note also that macOS ping interprets -W in milliseconds rather than seconds.

    sudo ping -c 1000 -i 0,01 -W 0,02 github.com
    ...
    ...
    --- github.com ping statistics ---
    1000 packets transmitted, 996 received, 0,4% packet loss, time 14249ms
    rtt min/avg/max/mdev = 16.164/17.806/29.675/1.289 ms, pipe 3

The effects of packet loss can be avoided by using TCP instead of UDP. A TCP connection detects lost packets and retransmits them. However, retransmitting lost packets increases the network delay, because the sending node has to wait for confirmation from the destination node. We will dive into the details of this aspect in the next article.

Jitter

Jitter, also called packet delay variation, is a direct consequence of delays in the network, especially variable delays. The delay components described above are not constant in a network; they depend on many factors. So, as you can imagine, the time between sending a data packet and its arrival varies.

With this variable delay in mind, we can quickly see that when we send a set of data packets in a particular order, the variable delays can make them arrive in a different order (see image below). This in turn has the same effect as packet loss: even if no data is lost, it is still corrupted when the data packets cannot be put back together in the correct order.
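A few lines are enough to see how variable delays reorder packets. In this sketch, five packets are sent 10 ms apart and each gets a different, made-up network delay; the arrival order is simply the order of send time plus delay.

```python
def arrival_order(send_times_ms, delays_ms):
    """Sequence numbers in the order packets arrive, given per-packet network delays."""
    arrivals = [(send + delay, seq)
                for seq, (send, delay) in enumerate(zip(send_times_ms, delays_ms))]
    return [seq for _, seq in sorted(arrivals)]

sent = [0, 10, 20, 30, 40]
print(arrival_order(sent, [30, 30, 30, 30, 30]))  # constant delay: [0, 1, 2, 3, 4]
print(arrival_order(sent, [30, 12, 25, 8, 15]))   # variable delay: [1, 0, 3, 2, 4]
```

With a constant delay the order is preserved, however long the delay is; only the variation reorders the packets.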

To measure jitter yourself, you will need two machines. We will run a network test with iPerf to measure the jitter between them. This test also shows the bandwidth and the packet loss. To install iPerf, see https://iperf.fr/iperf-download.php.

Run these commands on two machines connected to the same network. Machine 1, the server, should have its firewall temporarily turned off; otherwise, port forwarding and firewall rules must be configured to make this work.

On machine 1, called the server:

    iperf -s -u -i 1
    ------------------------------------------------------------
    Server listening on UDP port 5001
    Receiving 1470 byte datagrams
    UDP buffer size: 208 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.44 port 5001 connected with 192.168.1.13 port 52192
    [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams
    [ 3] 0.0- 1.0 sec 11.5 MBytes 96.5 Mbits/sec 1.715 ms 5/ 8209 (0.061%)
    [ 3] 1.0- 2.0 sec 13.6 MBytes 114 Mbits/sec 0.166 ms 2/ 9681 (0.021%)
    [ 3] 2.0- 3.0 sec 12.5 MBytes 105 Mbits/sec 0.229 ms 2/ 8900 (0.022%)
    [ 3] 3.0- 4.0 sec 12.2 MBytes 102 Mbits/sec 1.366 ms 0/ 8680 (0%)
    [ 3] 4.0- 5.0 sec 12.8 MBytes 107 Mbits/sec 0.336 ms 54/ 9180 (0.59%)
    [ 3] 5.0- 6.0 sec 12.4 MBytes 104 Mbits/sec 0.230 ms 45/ 8909 (0.51%)
    [ 3] 6.0- 7.0 sec 12.5 MBytes 105 Mbits/sec 0.184 ms 0/ 8920 (0%)
    [ 3] 7.0- 8.0 sec 12.5 MBytes 105 Mbits/sec 0.282 ms 0/ 8928 (0%)
    [ 3] 8.0- 9.0 sec 12.5 MBytes 105 Mbits/sec 0.492 ms 0/ 8912 (0%)
    [ 3] 0.0-10.0 sec 125 MBytes 105 Mbits/sec 0.104 ms 108/89166 (0.12%)

On machine 2, called the client (find the IP address of your machine 1, the server):

    iperf -c 192.168.1.44 -u -b 100M
    ------------------------------------------------------------
    Client connecting to 192.168.1.44, UDP port 5001
    Sending 1470 byte datagrams, IPG target: 112.15 us (kalman adjust)
    UDP buffer size: 9.00 KByte (default)
    ------------------------------------------------------------
    [ 4] local 192.168.1.13 port 52192 connected with 192.168.1.44 port 5001
    [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams
    [ 4] 0.0-10.0 sec 125 MBytes 105 Mbits/sec
    [ 4] Sent 89166 datagrams
    [ 4] Server Report:
    [ 4] 0.0-10.0 sec 125 MBytes 105 Mbits/sec 0.104 ms 108/89166 (0.12%)
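The jitter column reported by iPerf is a smoothed estimate of how much the spacing between packets varies. As an illustration, this sketch implements the interarrival jitter estimator that RFC 3550 defines for RTP; we assume, rather than claim, that iPerf's figure is computed in a comparable way. Only differences between consecutive packets are used, so a constant clock offset between the two machines cancels out.

```python
def rtp_jitter(send_ms, arrival_ms):
    """Interarrival jitter estimate as defined for RTP in RFC 3550.

    For consecutive packets i-1 and i, D = (R_i - R_{i-1}) - (S_i - S_{i-1});
    the running estimate is smoothed with J += (|D| - J) / 16.
    """
    jitter = 0.0
    for i in range(1, len(send_ms)):
        d = (arrival_ms[i] - arrival_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter

print(rtp_jitter([0, 10, 20], [5, 15, 25]))  # constant delay: 0.0
print(rtp_jitter([0, 10, 20], [5, 20, 25]))  # variable delay: 0.60546875
```

The factor 1/16 makes the estimate react gradually, so a single late packet raises it only slightly while persistent variation pushes it up.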

Jitter can be handled by creating a buffer of data packets on the receiving node that reorders them based on their sender timestamps. However, this increases the queuing delay, and thus the overall network delay. More about this subject in our next article.

What to do about it?

Now that we understand which elements of a network cause delay, we can start to look at the different solutions to minimize it.

In our next article we will look at why delay must be minimized for certain kinds of communication, and how to achieve a minimal delay in our network communications. We will focus on solving network delays for video streaming applications, with practical examples that you can test on your own.

Thank you for reading!

Sources

Delays in Computer Network: https://www.geeksforgeeks.org/delays-in-computer-network/ (29–01–2021)

IP address lookup: https://www.howtogeek.com/117371/how-to-find-your-computers-private-public-ip-addresses/ (29–01–2021)

iPerf: https://iperf.fr/